A discussion of how to do Computer Science well, particularly writing code and architecting program solutions.

Showing posts with label link. Show all posts

Saturday, March 23, 2019

Repost: Code Smells ... Is concurrency natural?

Writing parallel code is not considered easy, but it can be a natural approach to some problems for novices. When a beginner wants something to happen twice concurrently, the reasonable thing would be to do what works once, a second time. However, this may conflict with other constructs of the language, such as main() or having to create threads. See more here.
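To make the mismatch concrete, here is a minimal Python sketch. It is not taken from the linked post, and the names `greet`, `run_twice_sequentially`, and `run_twice_concurrently` are hypothetical. Repeating the call is the natural first attempt, but it runs one after the other; getting the two to overlap requires creating threads explicitly.

```python
import threading


def greet(name, results):
    """The thing that 'works once': record a greeting."""
    results.append(f"hello from {name}")


def run_twice_sequentially():
    # The novice's natural attempt: do what worked once, a second time.
    # Both calls succeed, but the second starts only after the first ends.
    results = []
    greet("first", results)
    greet("second", results)
    return results


def run_twice_concurrently():
    # What the language asks for instead: one thread per concurrent activity,
    # started explicitly and joined before the shared results are read.
    results = []
    threads = [
        threading.Thread(target=greet, args=(name, results))
        for name in ("first", "second")
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    print(run_twice_sequentially())
    # The order of the concurrent results is not guaranteed, so sort them.
    print(sorted(run_twice_concurrently()))
```

The concurrent version needs three extra ideas (thread objects, start, join) that have nothing to do with the task itself, which is roughly the friction the post describes.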

Friday, September 14, 2018

Is C low level?

A recent ACM article, C is Not a Low-Level Language, argues that for all of our impressions that C is close to hardware, it is not actually "low-level". The argument is as follows: C was written for the PDP-11 architecture, and at the time, it was a low-level language. As architectures have evolved, C's machine model has diverged from hardware, which has forced processor designers to add new features to attain good performance with C, such as superscalar execution for ILP and extensive branch prediction.

Processors must also maintain caches to hide memory latency, and those caches require significant logic to maintain coherence and the illusion that memory is shared between the threads of a process. Furthermore, the compiler is called upon to find optimization opportunities that may be unsound and definitely require programmer-years to implement.

The author repeatedly contrasts CPUs with GPUs, while noting that GPUs suit only very specific problems and get their performance "at the expense of requiring explicitly parallel programs". If you were not keeping track, a GPU requires thousands of threads to match the CPU's performance. The author calls for the following: "A processor designed purely for speed, not for a compromise between speed and C support, would likely support large numbers of threads, have wide vector units, and have a much simpler memory model." That generally sounds like the GPU design.

I appreciate the callouts to C's shortcomings, which it certainly has. The notion that C has driven processor design is odd, yet it does reflect the fact that processors are designed to run current programs fast. And with the programs being written in either C or a language built on C, that forces many programs into particular patterns. I even spent some time in my PhD studies considering a version of this problem: how do you design a new "widget" for the architecture if no programs are designed for widgets to be available?

All to say, I think C is a low-level language, and while its distance from hardware may be growing, there is nothing else beneath it. This is a gap that needs to be addressed, and by a language that has explicit parallel support.

Friday, January 19, 2018

The Importance of Debugging

How do you teach students about debugging? To have The Debugging Mind-Set? Can they reason about possible causes of incorrect behavior?

For the past year, I have been revising material to help students learn to use gdb to assist in debugging, which is an improvement over the "printf-based" methods used previously. And while gdb is usually used when the program has crashed from a segfault, many students are stymied when the problem is incorrect behavior rather than invalid behavior.

When their program crashes, they usually appreciate that gdb can show them what line of code / assembly has crashed. But how can a student "debug" incorrect behavior? Many try the "instructor" debugging method (they try this too when the code is crashing), where they either present their code or describe the basics of what they have done and ask us, as an oracle, what is wrong. I try to offer questions that they need to answer about their code. Sometimes the student follows well and this is valuable guidance for him or her to solve the behavior issue.

Other times I have asked these questions, trying to build up a set of hypotheses to test and investigate, and the student effectively rejects them. Not for being wrong, but for not clearly being the answer. They have the subconscious idea that their code is failing for reason X, which was their intuitive guess (these guesses are a good start). But the idea that they just do not know enough and need to collect more data is not grasped.

You are a doctor when debugging. Sometimes the patient gives clear symptoms. And other times, you need to run more tests. Again, thankfully, usually if an instructor recommends running a certain test, the data gleaned is enough to guide them through the diagnosis. Students appreciate when this happens; however, there is creativity in considering other possibilities and sometimes that possibility requires being open to everything (see TNG Finale).

This semester I am co-teaching Operating Systems. We have told the students that you have to know how to debug, as sometimes printf is what you have to debug. And other times, to quote James Mickens, "I HAVE NO TOOLS BECAUSE I’VE DESTROYED MY TOOLS WITH MY TOOLS." So in the dystopian real world, you need all the debugging tools and techniques you can get.
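As a small illustration of collecting data before settling on a diagnosis, here is a sketch in Python rather than C with gdb. The function `largest`, its bug, and the test cases are all hypothetical and not from the course material. The function never crashes, it just returns the wrong answer for some inputs, and each experiment is chosen to confirm or rule out one guess about why.

```python
def largest(values):
    """Buggy on purpose: returns a wrong answer without crashing."""
    best = 0  # suspicious starting value
    for v in values:
        if v > best:
            best = v
    return best


def run_experiments():
    """Run one test per hypothesis and record what was actually observed."""
    cases = {
        # Hypothesis: it fails whenever the input mixes signs.
        "mixed signs": [3, -1, 7],
        # Hypothesis: it fails on longer lists.
        "longer list": [1, 2, 3, 4, 5, 6, 7, 8, 9],
        # Hypothesis: it fails when no value exceeds the starting value.
        "all negative": [-5, -2, -9],
        "single negative": [-4],
    }
    report = {}
    for name, values in cases.items():
        got = largest(values)
        expected = max(values)
        report[name] = (got, expected, got == expected)
    return report


if __name__ == "__main__":
    for name, (got, expected, ok) in run_experiments().items():
        status = "ok" if ok else "WRONG"
        print(f"{name:16} got={got:3} expected={expected:3} {status}")
```

Only the two all-negative cases fail, which rules out the first two guesses and points at the initial value of `best`. The intuitive guess was a fine place to start, but it took the extra tests to tell the hypotheses apart.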

Friday, April 21, 2017

Repost: What Makes a Program Elegant?

In a recent issue of the Communications of the ACM, there was a short article titled, What Makes a Program Elegant? I found it an interesting discussion that summarizes well the characteristics of elegant programming: minimality, accomplishment, modesty, and revelation. Revelation is one that I had not considered before, but I think it is the most important of all. There are some code sequences that I have written whose elegance has rested most of all on revelation: using and showing some aspect of computers and programming that I have never seen before, or revealing that there is a modest way to accomplish something new or differently.

Monday, February 20, 2017

Repost: Learn by Doing

I want to take a brief moment to link to two of Mark Guzdial's recent posts. Both include an important theme in teaching: students learn best by doing, not hearing. Oddly, students commonly repeat the misconception that they learn by hearing. If I structure our class time to place them as the ones doing something, rather than me "teaching" by speaking, the appraisal can be that I did not teach. They may not dispute that they learned, but I failed to teach them.

Students learn when they do, not just hear. And Learning in MOOCs does not take this requirement into account.

I have to regularly review these points. So much so that I was able to give them to a group of reporters last week (part of new faculty orientation, but still).

Thursday, June 30, 2016

Computer Scientists and Computers Usage

This post is built on a discussion I had with Dr. Thomas Benson and the recent blog post by Professor Janet Davis, I've got a POSSE. Less directly, Professor Mark Guzdial has written several recent blog posts about parents wanting CS in high school, most recently this (where the point is raised that not everyone knows what this is).

What is it to be a computer scientist? Is it just software development? When I was first looking at colleges and I knew I wanted to program, I saw three majors that seemed appropriate: Computer Science, computer programming, and game development. And from my uninformed perspective, Computer Science (CS) initially seemed the least applicable. This was before Wikipedia, so how would one know what CS is?

Michael Hewner's PhD defense was a study of how undergrads perceive the field: as they learn and progress through the curriculum, do their views change? I know my views changed; for example, functional programming was a complete unknown before matriculating. By the completion of my undergraduate degree, I perceived that Computer Science has three pillars: systems, theory, and application. I still view CS primarily from a programmer's lens and not a big tent view.

Indirectly, the Economist wrote about Programming Boot Camps where college grads go back to get training in programming; however, "Critics also argue that no crash course can compare with a computer-science degree. They contend that three months’ study of algorithms and data structures is barely enough to get an entry-level job." I both agree and disagree. There is a further transformation of the economy coming whereby workers will . One PL (programming language) researcher recently estimated that Excel is the most common programming language. Can the worker using Excel do more or do it faster with VB or Python or ...?

Are there nuances to the study of Computer Science in which students can focus further? Clearly there are with the mere presence of electives. Georgia Tech even groups similar electives into "threads". Besides just electives, under a programmer-centric view of CS, software development involves more than just the strict programming aspect. Even if CS is the "programming", a written program requires software engineering to maintain and manage its development and designers to prepare the UI (etc).

Thus CS might evolve more toward having a pre-CS major, and after the first two(?) years, students can then declare as Computer Science or Software Engineering or Human Computer Interaction or .... So this is both less and more than Georgia Tech's threads, but an approach with similarities. In common, this development of CS major(s) balances a concern of whether students have the fundamentals to succeed in areas related (i.e., programming, software development, and design) to their actual major, while allowing some greater depth and specialization.

This step is a maturing of the discipline. No longer just one major, but a school of majors. CMU offers a BS in CS, along with three other interdisciplinary majors. Georgia Tech offers CS (with 8-choose-2 threads) and Computational Media. Rose-Hulman offers both CS and Software Engineering. (I am only citing the programs that I know about, not an exhaustive list.)

Wednesday, March 9, 2016

Repost: How I spent my time at SIGCSE

When I attend a conference, I try to prepare blog posts detailing the presentations that I see and other items of objective content. More important are the people in attendance. I spend more time meeting with colleagues than I do actually sitting in the sessions. Before you are shocked, understand that much of our conversation is about these sessions. Depending on the conference there can be between 2 and 10? sessions occurring concurrently. I cannot be in 2, let alone 10, places at once, so instead we sample (as in the appearance of randomly selecting) the sessions and then discuss.

I met Janet Davis, who now heads the CS program at Whitman College, during my first time at SIGCSE. I value her different perspective and always try to seek her out at some point during the conference.

These themes merge in her blog post that shows some idea as to how each day is organized and how the sessions (papers, panels, etc) play a part in a very busy schedule.

Wednesday, January 13, 2016

Repost: Avoid Panicking about Performance

In a recent post, another blogger related how a simple attempt to improve performance nearly spiraled out of control. The lesson is to always measure and understand your performance problem before attempting any solution. Now, the very scope of your measurements and understanding can vary depending on the complexity of your solution. And when your "optimizations" have caused the system to go sideways, it is time to take a careful appraisal of whether to revert or continue. I have done both, and more often I have wished that I had reverted rather than continued. After all, it is better for the code to work slowly rather than not work.

Again, always measure before cutting.
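A minimal sketch of what "measure before cutting" can look like in practice, using Python's standard `timeit` module. The two functions and the helper names `measure` and `compare` are hypothetical examples, not from the linked post. The point is the order of operations: confirm the candidate still gives the right answer, then compare measured times, and only then decide whether to keep the change.

```python
import timeit


def sum_of_squares_loop(n):
    """Baseline: the slow but obviously correct version."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def sum_of_squares_formula(n):
    """Candidate 'optimization': closed form for 0^2 + 1^2 + ... + (n-1)^2."""
    return (n - 1) * n * (2 * n - 1) // 6


def measure(func, n, repeat=5, number=20):
    """Return the best per-call time in seconds over several repetitions."""
    timings = timeit.repeat(lambda: func(n), repeat=repeat, number=number)
    return min(timings) / number


def compare(n=10_000):
    # Step 1: working slowly beats not working, so check correctness first.
    assert sum_of_squares_loop(n) == sum_of_squares_formula(n)
    # Step 2: only then look at the measured times.
    return {
        "baseline": measure(sum_of_squares_loop, n),
        "candidate": measure(sum_of_squares_formula, n),
    }


if __name__ == "__main__":
    for name, seconds in compare().items():
        print(f"{name:10} {seconds * 1e6:10.2f} microseconds per call")
```

Taking the minimum over several repetitions is one common way to reduce noise from other activity on the machine; whether that is the right statistic depends on what you are trying to learn.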

Thursday, December 17, 2015

Teaching Inclusively in Computer Science

When I teach, I want everyone to succeed and master the material, and I think that everyone in the course can. I only have so much time to work with and guide the students through the material, so how should I spend this time? What can I do to maximize student mastery? Are there seemingly neutral actions that might impact some students more than others? For example, before class this fall, I would chat with the students who were there early, sometimes about computer games. Do those conversations create an impression that "successful programmers play computer games"? To these questions, I want to revisit a pair of posts from the past year about better including the students.

The first is a Communications of the ACM post from the beginning of this year. It listed several seemingly neutral decisions that can bias against certain groups. One suggestion: maintain a tone of voice that suggests every question is valuable and not "I've already explained that so why don't you get it". As long as they are doing their part in trying to learn, then the failure is on me, the communicator.

The second is a Mark Guzdial post on Active Learning. The proposition is that traditional lecture-style teaching advantages the privileged students. And a key thing to remember is that most of us are the privileged, so even though I and others have "succeeded" in that setting, it may have been despite the system and not because of the teaching. Regardless of the instructor, the teaching techniques themselves have biases toward different groups. So if we want students to master the material, then perhaps we should teach differently.

Active learning has a growing body of research showing that these teaching techniques help more students succeed at mastering a course, especially the less privileged students. Perhaps slightly less material is "covered", but students will learn and retain far more. Isn't that better?

Wednesday, December 16, 2015

PhD Defense - Diagnosing performance limitations in HPC applications

Kenneth Czechowski defended his dissertation work this week.

He is trying to develop a science for the normal art of diagnosing low-level performance issues, such as processing a sorted array and an i7 loop performance anomaly. I have much practice with this art, but I would really appreciate having more formalism in these efforts.

One effort is to try identifying the cause of performance issues using the hardware performance counters. These counters are not well documented and so the tools are low-level. Instead, he developed a meta tool that intelligently iterates over the counters, thereby conducting a hierarchical event-based analysis: it starts with 6 performance counters and then iterates on more detailed counters that relate to the performance issue. The goal is to diagnose why the core is unable to retire the full bandwidth of 4 micro-ops per cycle.

Even if a tool can provide measurements of specific counters that indicate "bad" behavior, the next problem is that observing certain "bad" behaviors, such as bank conflicts, does not always correlate with performance loss, as the operation must impact the critical path to cost any time.

The final approach is to take the program and build artificial versions of the hot code, such as removing the memory or compute operations from the loop body. For some applications, several loops account for most of the time. Then the loops can be perturbed in different ways that force certain resources to be exercised further. For example, the registers in each instruction are scrambled so that the dependency graph is changed to either increase or decrease the ILP while the instruction types themselves are unchanged.
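The idea of building artificial versions of the hot loop can be sketched at a very high level as follows. This is only an illustration of the structure of the experiment, not the tool from the dissertation: the kernel and the names `full_kernel`, `memory_only`, `compute_only`, and `time_variants` are hypothetical, and in an interpreted language the timings mostly reflect interpreter overhead rather than hardware resources. The real work operates on machine instructions.

```python
import time


def full_kernel(data):
    """Original hot loop: one element read plus one multiply-add per iteration."""
    total = 0.0
    for x in data:
        total += x * x + 1.0
    return total


def memory_only(data):
    """Artificial variant: keep the element reads, drop the arithmetic."""
    last = 0.0
    for x in data:
        last = x
    return last


def compute_only(n):
    """Artificial variant: keep the arithmetic, drop the element reads."""
    total = 0.0
    x = 1.5
    for _ in range(n):
        total += x * x + 1.0
    return total


def time_variants(n=100_000):
    """Time each variant once; a large gap hints at which part dominates."""
    data = [float(i % 7) for i in range(n)]
    variants = (
        ("full", lambda: full_kernel(data)),
        ("memory_only", lambda: memory_only(data)),
        ("compute_only", lambda: compute_only(n)),
    )
    timings = {}
    for name, run in variants:
        start = time.perf_counter()
        run()
        timings[name] = time.perf_counter() - start
    return timings


if __name__ == "__main__":
    for name, seconds in time_variants().items():
        print(f"{name:13} {seconds * 1e3:8.2f} ms")
```

If the memory-only variant takes nearly as long as the full loop, the arithmetic is probably not the limiting resource, and vice versa. The register-scrambling perturbation described above has no real analogue at this level.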

Friday, August 28, 2015

Repost: Incentivizing Active Learning in the Computer Science Classroom

Studies have shown that using active learning techniques improves student learning and engagement. Anecdotally, students have brought up these points to me from my use of such techniques. I even published a study at SIGCSE on using active learning with undergraduate and graduate students. This study brought up an interesting point, which I will return to shortly: undergraduate students prefer these techniques more than graduate students do.

Mark Guzdial, far more senior than me, recently challenged Georgia Tech (where we both are) to incentivize the adoption of active learning. One of his recent blog posts lists the pushback he received, Active Learning in Computer Science. Personally, as someone who cares about the quality of my teaching, I support these efforts although I do not get to vote.

Faculty members at R1 institutions, such as Georgia Tech, primarily spend their time on research; however, they are not research scientists and therefore they are being called upon to teach. And so you would expect that they would do this well. In meeting with faculty candidates, there was one who expressed that the candidate's mission as a faculty member would be to create new superstar researchers. Classes were irrelevant to this candidate as a student; therefore, there would be no need to teach well, as this highest end (telos) of research justifies the sole focus on students who succeed despite their instruction, just like the candidate did. Mark's blog post suggests that one day Georgia Tech or other institutions may be sued for this sub-par teaching.

What about engagement? I (along with many students and faculty) attended a visiting speaker talk earlier this week and was able to pay attention to the hour-long talk even though it was effectively a lecture. And for this audience, it was a good talk. The audience then has the meta-takeaway that lectures can be engaging; after all, we paid attention. But we are experts in this subject! Furthermore, for most of us there, this is our subfield of Computer Science. Of course we find it interesting; we have repeatedly chosen to study it.

For us, the material we teach has become self-evidently interesting. I return to the undergraduate and graduate students that I taught. Which group is closer to being experts? Who has more experience learning despite the teaching? Who preferred me to just lecture? And in the end, both groups learned the material better.

Edit: I am by no means condemning all of the teaching at R1s or even Georgia Tech. There are many who teach and work on teaching well. The Dean of the College of Computing has also put some emphasis on this through teaching evaluations. Mark's post was partially noting that teaching evaluations are not enough; we can and should do more.

Monday, August 17, 2015

Course Design Series (Post 2 of N): Choosing a Textbook

Having now read both Programming Language Pragmatics, Third Edition and Concepts of Programming Languages (11th Edition), I have settled on the former as my textbook for the fall. I do not find either book ideally suited, and I wish that the Fourth Edition was being released this summer and not in November, which is why it still hasn't arrived.

For the choice of which textbook to use, it was "Concepts" to lose, and with 11 editions, the text should also be better revised. I dislike reading the examples in a book and questioning how the code would compile. Beyond which, the examples felt quaint and contrived.

(edit) For example, I was reviewing the material on F# and copied in an example from the text:

let rec factorial x =
    if x <= 1 then 1
    else n * factorial(n-1)

Does anyone else notice that the function parameter is x on the first two lines and n on the last?!

Before the Concepts book is written off entirely, there are many valuable aspects. I enjoyed reading about the history of programming languages, especially for exposing me to Plankalkül. The work also took a valuable tack in the subject by regularly looking at the trade-offs between different designs and features. This point certainly helped inform my teaching of the material.

Fundamentally, when I looked at the price of the two textbooks, the benefits of using the newer Concepts textbook could not outweigh the nearly doubled price tag. Most of the positive points are small things and can be covered as addenda to the material.

(FCC note - Concepts of Programming Languages was provided free to me by the publisher.)

Wednesday, May 13, 2015

Course Design Series (Post 1 of N): Why Study Programming Languages

Obviously, there are some practitioners and researchers who base their living on programming language design. But what of the rest of us? The Communications of the ACM ran a short article on why: Teach foundational language principles. Language design is increasingly focused on programmer productivity and correctness. As programmers, are we aware of the new features and programming paradigms?

Particularly, let's look at three aspects of programming languages: contracts, functional languages, and type systems. By introducing each to budding programmers, we improve their habits, just as I presently include comments and other style components in project grades. First, contracts provide a well-defined mechanism for specifying the requirements of each component in a system. This goes beyond commenting each component and interface; the requirements are stated in a manner that permits the compiler or runtime system to verify and enforce them.
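
As a rough illustration of the contract idea: C has no contract syntax, so the sketch below approximates one with assert(), which the runtime checks in debug builds. The function name max_element and its parameters are hypothetical, chosen only for this example.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper, for illustration only: returns the largest value
 * in a non-empty array. The assert() calls state the contract in a form
 * the runtime can check (they are compiled out when NDEBUG is defined). */
int max_element(const int *values, size_t count)
{
    /* Preconditions: the caller must supply a real, non-empty array. */
    assert(values != NULL);
    assert(count > 0);

    int best = values[0];
    for (size_t i = 1; i < count; ++i) {
        if (values[i] > best) {
            best = values[i];
        }
    }

    /* Postcondition: no element exceeds the returned value. */
    for (size_t i = 0; i < count; ++i) {
        assert(values[i] <= best);
    }
    return best;
}
```

Unlike a comment, a violated precondition here stops the program at the call that broke the rule, which is the "verify and enforce" half of the idea. A language with real contract support would let the compiler see these conditions as well.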

Functional languages are well known, and whether or not you may ever use one, they teach valuable techniques and mental models regarding programming. A programmer should favor pure, side-effect-free procedures, rather than interleaving logic across different components. Other practices, such as unit testing, also trend toward clean interfaces; however, being forced to obey these rules via the underlying language is great practice.

Type systems are treated by the authors as a panacea, as they call for language designers to be "educated in the formal foundations of safe programming languages - type systems." Panacea or not, reasoning about functionality within the bounds of types leads to code that is clearer and more maintainable. Even in a weakly typed language, such as C, one can use enums rather than making everything an "int".
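
A minimal sketch of the enum point, with hypothetical names (traffic_light, next_light) invented for the example. C enums still convert freely to and from int, so this is a modest gain in safety, but the signature now says what the values mean.

```c
#include <assert.h>

/* Hypothetical type for illustration: the states of a traffic light.
 * With plain ints these would be the magic numbers 0, 1, and 2. */
typedef enum {
    LIGHT_RED,
    LIGHT_YELLOW,
    LIGHT_GREEN
} traffic_light;

/* Returns the state that follows `current` in the usual cycle. */
traffic_light next_light(traffic_light current)
{
    switch (current) {
    case LIGHT_RED:    return LIGHT_GREEN;
    case LIGHT_GREEN:  return LIGHT_YELLOW;
    case LIGHT_YELLOW: return LIGHT_RED;
    }
    /* Only reachable if the caller passed a value outside the enum. */
    assert(!"invalid traffic_light value");
    return LIGHT_RED;
}
```

Because the switch is over an enum with no default case, GCC and Clang can warn (via -Wswitch, which -Wall enables) if a new enumerator is added and not handled. An int parameter gives the compiler nothing to check.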

As these aspects are increasingly used in various forms in current language design, programmers need to be knowledgeable about them in order to be effective and use the languages to their full potential. It is therefore incumbent on me, and other educators, to appropriately include these aspects when we teach about programming languages.

Which leads me back to writing a course description and learning objectives for my fall course. Maybe later this month once I figure out why one of the textbooks still hasn't arrived.

Friday, April 17, 2015

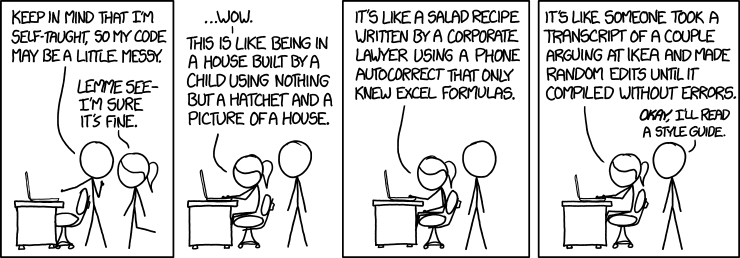

Repost: Code Quality

xkcd had a great comic today about the code quality of self-taught programmers. While there are technically trained programmers that write poor quality code, my impression is that this is more common with self-taught programmers as well as programmers who are only taught programming itself. Basically as part of the technical training, someone learns more than just programming. They learn about CS theory, data structures, multiple programming languages, and are more often exposed to well written / designed programs. Learning to program at a high quality is similar to learning a natural language, in that you study grammar and spelling, you read great works of literature and analyze them, and you practice reading / writing / speaking.

Each month I understand better what it takes to be competent in my field and also respect more the idea that curriculum is established by experts for a purpose, whether or not I may like certain topics.

Thursday, April 2, 2015

Course Design Series (Post 0 of N): A New Course

This fall I will again be Instructor of Record. My appointment is to teach CS 4392 - Programming Languages. This is an unusual situation in that the course has not been taught for over 5 years (last time was Fall 2009). Effectively, the course will have to be designed afresh.

The first step in course design (following L. Dee Fink and McKeachie's Teaching Tips: Chapter 2) is to write the learning objectives using the course description, along with prerequisites and courses that require this one. Let's review what we have:

Course Description: none

Prerequisites:

Undergraduate Semester level CS 2340 (which has the following description)

Object-oriented programming methods for dealing with large programs. Focus on quality processes, effective debugging techniques, and testing to assure a quality product.

Courses depending on this: none

Alright. Now I will turn to the CS curriculum at Georgia Tech. Georgia Tech uses a concept they call "threads", which are sets of related courses. CS 4392 is specifically in the Systems and Architecture thread. This provides several related courses:

CS 4240 - Compilers, Interpreters, and Program Analyzers

Study of techniques for the design and implementation of compilers, interpreters, and program analyzers, with consideration of the particular characteristics of widely used programming languages.

CS 6241 - Compiler Design

Design and implementation of modern compilers, focusing upon optimization and code generation.

CS 6390 - Programming Languages

Design, structure, and goals of programming languages. Object-oriented, logic, functional, and traditional languages. Semantic models. Parallel programming languages.

Finally, the ACM has provided guidelines for the CS curriculum. Not only does this provide possible options for what material I should include, but they have also provided several ACM exemplar courses (c.f., http://www.cs.rochester.edu/

To summarize, if my first step is to write the learning objectives, then I am on step 0: write the course description. In a couple of weeks, I plan on finishing my initial review of potential textbooks as well as the other materials covered above. That will provide me the groundwork for the description and then objectives.

Monday, February 23, 2015

Compilers and Optimizations, should you care?

Compiler optimizations matter. One time in helping a fellow Ph.D. student improve a simulator's performance, I did two things: first, I replaced an expensive data structure with a more efficient one. Second, I turned on compiler optimizations. Together, the simulator ran 100x faster.

A question posted on Stack Exchange asked, "Why are there so few C compilers?" The main answer pointed out that any C compiler needs to be optimizing. Many optimizations occur on every compilation, each one gaining only a tiny increment in performance. While I enjoy discussing them in detail, I generally wave my hands and say that they are good, yet make debugging difficult. These optimizations are lumped together as the "optimization level".

In "What Every Programmer Should Know About Compiler Optimizations", we revisit optimizations. First, the compiler is no panacea and cannot correct for inefficient algorithms or poor data structure choices (although I am party to research on the latter). The article then suggests four points to assist the compiler in its efforts at optimizing the code.

"Write understandable, maintainable code." Please do this! Usually, the expensive resource is the programmer. So the first optimization step is to improve the programmer's efficiency with the source code. Remember Review: Performance Anti-patterns and do not start optimizing the code until you know what is slow.

"Use compiler directives." Scary. Excepting the inline directive, I have used these less than a half dozen times in almost as many years of performance work. Furthermore, the example of changing the calling convention is less relevant in 64-bit space where most conventions have been made irrelevant.

"Use compiler-intrinsic functions." (see Compiler Intrinsics the Secret Sauce) This can often dovetail with the first point by removing ugly bit twiddling code and putting in clean function calls.

"Use profile-guided optimization (PGO)." This optimization is based on the dynamic behavior of the program. Meaning that if you take a profile of the program doing X, and later the program does Y; executing Y can be slower. The key is picking good, representative examples of the program's execution.

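To make the intrinsics point concrete, here is a small sketch that counts set bits two ways. It assumes GCC or Clang, where __builtin_popcount is available; other compilers spell this differently, and the article itself targets a different toolchain. The function names are hypothetical.

```c
#include <stdint.h>

/* Hand-written bit twiddling: each iteration clears the lowest set bit,
 * so the loop runs once per set bit. */
unsigned popcount_loop(uint32_t v)
{
    unsigned count = 0;
    while (v != 0) {
        v &= v - 1;
        ++count;
    }
    return count;
}

/* Intrinsic version. ASSUMPTION: built with GCC or Clang, which provide
 * __builtin_popcount(unsigned int). The compiler may lower this to a
 * single instruction when the target supports one. */
unsigned popcount_intrinsic(uint32_t v)
{
    return (unsigned)__builtin_popcount(v);
}
```

The second function states the intent directly and leaves the instruction selection to the compiler, which is how intrinsics can serve both readability and performance.
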
So you have dialed up the optimization level, and written understandable code sprinkled with intrinsics. Now what? The next step (with which I agree) is to use link-time optimization (LTO) / Link-Time Code Generation (LTCG). This flag delays many optimizations until the entire program is available to be linked. One of the principles of software performance is that the more of the program available to be optimized, the better it can be optimized. (This principle also applies in computer architecture.) Thus, by delaying many optimizations until the entire program is available, the linker can find additional and better opportunities than were present in individual components.

The article notes, "The only reason not to use LTCG is when you want to distribute the resulting object and library files." And alas, I have fought several battles to overcome this point, as my work requires the use of LTO. Perhaps in the next decade, LTO will be standard.

Labels: article, C, C++, compilers, link, LTO, patterns, performance, stackoverflow

Wednesday, February 18, 2015

Going ARM (in a box)

ARM, that exciting architecture, is ever more available for home development. At first, I was intrigued by the low price points of a Raspberry Pi. And I have one, yet I felt the difficulties of three things: the ARMv6 ISA, the single core, and 512MB of RAM. For my purposes, NVIDIA's development board served far better. At present, that board is no longer available on Amazon; however, I have heard rumors of a 64-bit design being released soon.

With the release of the Raspberry Pi 2, many of my concerns have been allayed. I am also intrigued by the possibility it offers of running Windows 10.

Monday, December 8, 2014

Improving Computer Science Education

Recently in the news are two articles that relate to improving Computer Science Education. It is valuable to broaden the base of students who understand the basics of computing, just as students are expected to know Chemistry or Calculus. In fact, in my biased opinion, knowing the basics of computing and programming will have greater practical benefit to students; however, it should never be at the expense of a diverse education.

The White House announced that the 7 largest school districts will be including Computer Science in their curriculum. This will quickly lead to another problem of who will teach the Computer Science classes. Not me, but I am interested in teaching the teachers. I do want to see Computer Science as an actual specialty (endorsement) for Education majors.

Another aspect of broadening the base is retaining students enrolled in the major. Being part of the majority, it is difficult for me to know the challenges faced by other groups. Similarly, I know why I entered Computer Science, so I would like to understand why others have too. Why are they passionate or interested in this field that I am a part of? Here are some things minority students have to say about STEM.

Wednesday, November 26, 2014

Computer Science Diversity

I attended a top program in Computer Science, where the gender split was 60 / 40. Then I worked for five years at a major company. Therefore, my expectation is always that anyone, regardless of their gender, race, etc., can succeed in Computer Science. Now, recently there was a short-lived Barbie book about being a computer engineer. Ignoring any semantics about Computer Science, Software Engineering, and Computer Engineering being different disciplines, the work still did not portray women in the more technical efforts. I'd rather read a colleague's remix of the work.

In a different vein of diversity, as a white male, I have regularly been excluded from tech events because I dislike the taste of alcohol. Thus at the (especially) frequent events in industry settings where alcohol is served, I was not socializing with my colleagues, and instead would inevitably find myself back at my desk working. As a consequence, I was effectively excluded from the event. And now in academia, I find myself attending conferences where the social events are also touted for serving alcohol. I have no issue with serving alcohol; rather, it is that the drink options trend almost exclusively that way. Thus a recent article struck a chord about the continuing desirability of extending the options and respecting the decision (for whatever reason) to not drink alcohol.

Labels: career, conference, culture, discussion, diversity, link

Tuesday, September 16, 2014

Atomic Weapons in Programming

In parallel programming, most of the time the use of locks is good enough for the application. And when it is not, then you may need to resort to atomic weapons. While I can and have happily written my own lock implementations, it's like the story of a lawyer redoing his kitchen himself. It is not a good use of the lawyer's time unless he's enjoying it.

That said, I have had to use atomic weapons against a compiler. The compiler happily reordered several memory operations in an unsafe way. Using fence instructions, I was able to prevent this reordering, while not seeing fences in the resulting assembly. I still wonder if there was some information I was not providing.
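
For readers who have not met fences, here is a minimal sketch in C11 of the general pattern. This is not the code from the situation described above; the names payload, ready, publish, and try_consume are all hypothetical. The fences tell the compiler (and the hardware) that the write to payload may not move past the write to ready, and likewise for the reads.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical shared state for illustration. */
static int payload;        /* ordinary, non-atomic data */
static atomic_bool ready;  /* flag announcing that payload is valid */

/* Writer: store the data, then raise the flag. The release fence keeps
 * the payload store from being reordered after the flag store. */
void publish(int value)
{
    payload = value;
    atomic_thread_fence(memory_order_release);
    atomic_store_explicit(&ready, true, memory_order_relaxed);
}

/* Reader: check the flag, then read the data. The acquire fence keeps
 * the payload load from being reordered before the flag load. */
bool try_consume(int *out)
{
    if (!atomic_load_explicit(&ready, memory_order_relaxed)) {
        return false;
    }
    atomic_thread_fence(memory_order_acquire);
    *out = payload;
    return true;
}
```

On a strongly ordered target such as x86, release and acquire fences like these typically compile to no instruction at all. They only constrain the compiler's own reordering, which would be consistent with seeing no fences in the generated assembly.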

Regardless, the weapons are useful! And I can thank the following presentation for pointing me to the particular weapon that was needed: Atomic Weapons. I have reviewed earlier work by Herb Sutter and he continues to garner my respect (not that he is aware). Regardless of the source, I suggest any low-level programmer be aware of the tools that are available, as well as the gremlins that lurk in these depths and might necessitate appropriate weaponry.
